A well-structured machine learning (ML) pipeline is essential for deploying robust, scalable, production-ready models. From data collection to model monitoring, an end-to-end ML pipeline keeps each stage of the workflow automated, repeatable, and aligned with business goals. These stages can be hard to follow if you are new to machine learning, which is why enrolling in a Data Science Course can equip you with the tools and knowledge to build effective pipelines.

What Is a Machine Learning Pipeline?

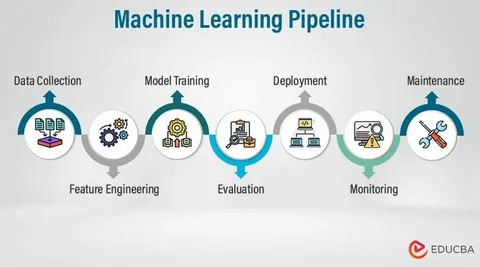

A machine learning pipeline is a series of steps that process data and train models in a sequential, structured manner. Instead of treating each stage (data cleaning, feature engineering, model training, and so on) as a standalone task, the pipeline integrates them into one cohesive workflow. This integration supports reproducibility, scalability, and consistency, especially when working with large datasets or deploying models in real-world applications.

An ML pipeline can be broken down into the following essential components:

- Data Collection

The first step is sourcing relevant data. Data can be collected from databases, web APIs, cloud storage, or web scraping. The quality and volume of data you collect directly impact the success of your ML model. It’s essential to ensure that the data represents the real-world problem you’re trying to solve.

Scheduled scripts and automation tools are often used to collect data periodically. In more advanced pipelines, streaming platforms such as Apache Kafka or Amazon Kinesis handle data ingestion for real-time processing.
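As a rough illustration of collecting data from a database, the sketch below pulls one incremental batch into a pandas DataFrame. The `transactions` table, its columns, and the `collect_since` helper are hypothetical, and an in-memory SQLite database stands in for a real source so the example runs on its own.

```python
import sqlite3

import pandas as pd

# Stand-in for a real source database (hypothetical table and columns).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, amount REAL, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO transactions (amount, created_at) VALUES (?, ?)",
    [(19.99, "2024-01-01"), (250.00, "2024-01-02"), (5.50, "2024-01-03")],
)
conn.commit()


def collect_since(connection: sqlite3.Connection, start_date: str) -> pd.DataFrame:
    """Fetch rows created on or after start_date (ISO date string)."""
    query = (
        "SELECT id, amount, created_at FROM transactions "
        "WHERE created_at >= ? ORDER BY id"
    )
    return pd.read_sql_query(
        query, connection, params=(start_date,), parse_dates=["created_at"]
    )


# A scheduler would call this periodically with the last collected date.
batch = collect_since(conn, "2024-01-02")
print(batch)
```

A scheduled job could call a function like this once per run and pass in the date of the previous batch, so each run only collects new rows.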

- Data Preprocessing and Cleaning

Once the data is collected, it typically needs significant cleaning. This includes:

- Removing rows or columns with missing values, or imputing them.

- Handling outliers.

- Converting categorical variables into numerical formats.

- Normalising or standardising features.

This stage is vital because “garbage in, garbage out” holds for machine learning. The cleaner and more relevant your data, the more effective your models will be. Python libraries such as pandas, NumPy, and scikit-learn provide powerful tools for preprocessing tasks.
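The cleaning steps listed above can be sketched with pandas and scikit-learn roughly as follows. The toy data, the column names, and the 5th to 95th percentile clipping rule are assumptions chosen for illustration, not a recommended recipe.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data: one missing age, one extreme income, one categorical column.
raw = pd.DataFrame({
    "age": [25.0, 32.0, np.nan, 41.0, 38.0],
    "income": [40_000.0, 52_000.0, 48_000.0, 1_000_000.0, 45_000.0],
    "city": ["Pune", "Delhi", "Pune", "Mumbai", "Delhi"],
})

# Handle outliers: clip income to its 5th-95th percentile range (an assumed rule).
low, high = raw["income"].quantile([0.05, 0.95])
clean = raw.assign(income=raw["income"].clip(lower=low, upper=high))

# Numeric columns: impute missing values with the median, then standardise.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Categorical columns: one-hot encode; sparse_threshold=0 forces a dense array.
preprocess = ColumnTransformer(
    [
        ("num", numeric_steps, ["age", "income"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,
)

X = preprocess.fit_transform(clean)
print(X.shape)
```

In a real project the transformer would be fitted on the training split only and then reused on validation, test, and production data, so that statistics such as the median and the scaling factors do not leak from unseen data.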

- Feature Engineering

Feature engineering involves creating new features or transforming existing ones so that the model has more predictive signal to work with. This can include:

- Creating interaction terms.

- Binning or bucketing numerical values.

- Extracting date-time components (like hour or day of the week).

- Applying logarithmic or polynomial transformations.

Feature engineering must be consistently applied to training and real-time data in production environments, so it’s crucial to automate this process through the pipeline.
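One way to keep feature engineering consistent is to put it in a single function that both the training job and the production service call. The sketch below is a minimal example with pandas; the `add_features` helper, the column names, and the bucket boundaries are hypothetical.

```python
import numpy as np
import pandas as pd


def add_features(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with engineered features added."""
    out = df.copy()
    ts = pd.to_datetime(out["timestamp"])

    # Date-time components.
    out["hour"] = ts.dt.hour
    out["day_of_week"] = ts.dt.dayofweek  # Monday = 0, Sunday = 6

    # Logarithmic transformation; log1p also handles zero amounts.
    out["log_amount"] = np.log1p(out["amount"])

    # Interaction term between two numeric columns.
    out["amount_x_qty"] = out["amount"] * out["quantity"]

    # Binning into labelled buckets (assumed boundaries).
    out["amount_bucket"] = pd.cut(
        out["amount"], bins=[0, 50, 200, np.inf], labels=["low", "mid", "high"]
    )
    return out


orders = pd.DataFrame({
    "timestamp": ["2024-01-01 09:30:00", "2024-01-06 18:05:00"],
    "amount": [20.0, 250.0],
    "quantity": [1, 3],
})
features = add_features(orders)
print(features)
```

Because the same function runs in both places, a change to a feature definition reaches training and serving together.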

- Data Splitting

Before training a model, the dataset is typically split into training, validation, and test sets. The validation set is used to tune the model, while the test set is held back so that final performance is measured on unseen data, which makes overfitting visible.

A common split is 70% of the data for training, 15% for validation, and 15% for testing, although the right ratio depends on how much data you have. Scikit-learn's train_test_split() divides data into two parts per call, so a three-way split is usually done by calling it twice.
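A 70/15/15 split can be sketched by applying train_test_split() twice: once to set aside 30% of the data, and once to divide that portion in half. The synthetic dataset and the random seed below are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a real dataset.
X, y = make_classification(n_samples=1000, n_features=10, random_state=42)

# First split: 70% training, 30% held out.
X_train, X_temp, y_train, y_temp = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=42
)

# Second split: divide the held-out 30% evenly into validation and test sets.
X_val, X_test, y_val, y_test = train_test_split(
    X_temp, y_temp, test_size=0.50, stratify=y_temp, random_state=42
)

print(len(X_train), len(X_val), len(X_test))  # 700 150 150
```

The `stratify` argument keeps the class proportions similar across the three sets, which matters most when classes are imbalanced.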

- Model Selection and Training

Once the data is ready, the next step is selecting an appropriate algorithm. This could range from simple linear regression models to complex neural networks. During this phase:

- Multiple models may be trained.

- Hyperparameter tuning (using techniques like grid search or randomised search, available in scikit-learn as GridSearchCV and RandomizedSearchCV) is often applied.

- Cross-validation is used to ensure the model performs well across different subsets of the data.

A well-designed pipeline should also be able to rerun this training step automatically when new data becomes available.
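Training, hyperparameter tuning, and cross-validation can be combined in a few lines with scikit-learn. The sketch below is one possible setup, not the only one: the synthetic data, the choice of logistic regression, the grid of `C` values, and the F1 scoring metric are all assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a prepared dataset.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Scaling lives inside the pipeline, so it is refitted on each CV training fold.
model = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Grid search over the regularisation strength with 5-fold cross-validation.
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(model, param_grid, cv=5, scoring="f1")
search.fit(X_train, y_train)

print(search.best_params_)
print(round(search.best_score_, 3))
```

Wrapping this in a function that takes the latest training data makes it straightforward to rerun the same search whenever the pipeline retrains.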

- Model Evaluation

After training, the model is evaluated using metrics like:

- Accuracy, Precision, Recall, F1-Score (for classification problems)

- RMSE, MAE, R² (for regression problems)

For classification problems, confusion matrices and ROC curves are also commonly used to visualise model performance. This step ensures that only the best-performing model moves forward to deployment.
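The metrics above are all available in scikit-learn's `sklearn.metrics` module. The sketch below computes them on small, made-up label and prediction lists so the numbers can be checked by hand.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    mean_absolute_error,
    mean_squared_error,
    precision_score,
    r2_score,
    recall_score,
)

# Classification: made-up labels with 3 TP, 3 TN, 1 FP, 1 FN.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

accuracy = accuracy_score(y_true, y_pred)    # 6 correct out of 8 = 0.75
precision = precision_score(y_true, y_pred)  # 3 / (3 + 1) = 0.75
recall = recall_score(y_true, y_pred)        # 3 / (3 + 1) = 0.75
f1 = f1_score(y_true, y_pred)                # 0.75
cm = confusion_matrix(y_true, y_pred)        # rows: true class, columns: predicted

# Regression: made-up targets and predictions.
y_true_reg = [3.0, 5.0, 7.0, 9.0]
y_pred_reg = [2.5, 5.0, 8.0, 9.5]

mae = mean_absolute_error(y_true_reg, y_pred_reg)         # 0.5
rmse = mean_squared_error(y_true_reg, y_pred_reg) ** 0.5  # sqrt(0.375)
r2 = r2_score(y_true_reg, y_pred_reg)                     # 1 - 1.5 / 20 = 0.925

print(accuracy, precision, recall, f1)
print(cm)
print(mae, round(rmse, 4), r2)
```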

- Model Deployment

Once a model passes evaluation, it’s deployed to a production environment where it can make real-time or batch predictions. Deployment can be done through:

- REST APIs using frameworks like Flask or FastAPI

- Cloud platforms like Amazon SageMaker, Google Cloud Vertex AI (the successor to AI Platform), or Azure Machine Learning

- Docker containers for scalable, portable deployment

Depending on the application, this stage also involves integrating the model with user interfaces, databases, or other systems.
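Whichever deployment route is used, the trained model usually has to be saved by the training job and loaded again by the serving process. The sketch below shows that hand-off with joblib; the Iris dataset, the temporary file path, and the `predict_one` helper are assumptions, and the web framework itself is left out to keep the example self-contained.

```python
import tempfile
from pathlib import Path

import joblib
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Training side: fit a pipeline and persist it as a single artifact.
X, y = load_iris(return_X_y=True)
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
]).fit(X, y)

model_path = Path(tempfile.mkdtemp()) / "model.joblib"  # hypothetical location
joblib.dump(pipeline, model_path)

# Serving side: load the artifact once at start-up, then reuse it per request.
served_model = joblib.load(model_path)


def predict_one(features: list[float]) -> int:
    """Return the predicted class index for a single feature vector."""
    return int(served_model.predict([features])[0])


print(predict_one([5.1, 3.5, 1.4, 0.2]))
```

A Flask or FastAPI route would typically parse the request body into a feature list, call a function like `predict_one`, and return the result as JSON. Only load joblib files from sources you trust, since they are pickle-based.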

- Monitoring and Maintenance

Deploying the model is not the end. Data distribution can change over time in the real world—a phenomenon known as data drift. Continuous monitoring ensures:

- The model’s predictions remain accurate.

- Latency is within acceptable limits.

- Retraining is triggered when performance degrades.

MLflow is often used to track experiments and model versions, while Prometheus and Grafana are commonly used to collect metrics and display them on dashboards.
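A simple drift check compares the distribution of a feature in recent production data against the distribution seen at training time. The sketch below uses a two-sample Kolmogorov-Smirnov test from SciPy, which is one common choice among several; the simulated data, the `has_drifted` helper, and the 0.01 significance threshold are assumptions.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(0)

# Simulated feature values: training-time reference vs. two production windows.
reference = rng.normal(loc=0.0, scale=1.0, size=5000)
live_same = rng.normal(loc=0.0, scale=1.0, size=5000)     # same distribution
live_shifted = rng.normal(loc=0.5, scale=1.0, size=5000)  # mean has moved


def has_drifted(ref: np.ndarray, live: np.ndarray, alpha: float = 0.01) -> bool:
    """Flag drift when the KS test rejects 'same distribution' at level alpha."""
    result = ks_2samp(ref, live)
    return bool(result.pvalue < alpha)


print("same distribution flagged:", has_drifted(reference, live_same))
print("shifted distribution flagged:", has_drifted(reference, live_shifted))
```

In a monitoring job, a positive result for an important feature could raise an alert or trigger the retraining step. With large samples the test also flags very small shifts, so the threshold usually needs tuning against what actually hurts model performance.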

Benefits of Building an End-to-End Pipeline

- Automation: Saves time and reduces manual errors.

- Reproducibility: Ensures consistent results across experiments.

- Scalability: Facilitates the transition from prototype to production.

- Team Collaboration: Structured pipelines help multiple teams work together efficiently.

Tools and Frameworks for Building Pipelines

Several open-source and commercial tools can help build and manage ML pipelines:

- Scikit-learn Pipelines: Excellent for small to medium projects.

- TensorFlow Extended (TFX): Designed for production-grade ML pipelines built around TensorFlow.

- Kubeflow: Great for orchestrating ML workflows in Kubernetes environments.

- Apache Airflow: Widely used for scheduling and orchestrating data workflows.

Real-World Applications

Industries such as finance, healthcare, e-commerce, and manufacturing use ML pipelines to:

- Detect fraud in real-time.

- Predict patient readmissions.

- Recommend products to users.

- Optimise supply chain logistics.

By building robust ML pipelines, companies can rapidly scale their AI initiatives and turn insights into action.

Conclusion

An end-to-end machine learning pipeline is more than just a series of scripts—it’s an organised, repeatable workflow that bridges data science and engineering. As organisations strive to deploy AI at scale, mastering pipeline architecture becomes indispensable for any aspiring data scientist. If you’re looking to upskill or start a career in machine learning, enrolling in a data scientist course in Hyderabad can provide hands-on experience in building and deploying real-world pipelines, equipping you for the challenges of tomorrow.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad
